AI Is "Sucking Up" Global Electricity? And the Scarier Part Is Yet to Come

Time: 2024-12-30 By: ChipSpark technology

In recent years, the rise of artificial intelligence (AI) has sparked extensive discussion and concern. Many people worry that AI will cause a sharp rise in unemployment, while some optimists joke, "As long as the electricity bill is more expensive than steamed buns, AI will never be able to completely replace humans." It is only a joke, but behind it lies the real issue of AI's energy consumption, and more and more people worry that high energy consumption will become a bottleneck for AI development. Recently, Kyle Corbitt, a tech entrepreneur and former Google engineer, said on the social media platform X that Microsoft has already run into this challenge.

How much power does AI really consume?

Corbitt said that the Microsoft engineers training GPT-6 are busy building an InfiniBand (IB) network to connect GPUs distributed across different regions. The task is very difficult, but they have no choice: if more than 100,000 H100 chips were deployed in the same region, the power grid would collapse. Why would concentrating these chips bring down the grid? Let's do a simple calculation.

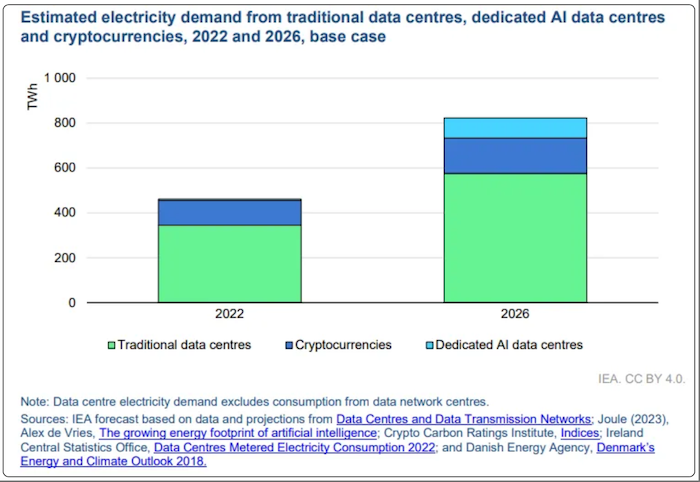

Data published on NVIDIA's website shows that the peak power of each H100 chip is 700 W, so the peak power draw of 100,000 H100 chips can reach 70 million W (70 MW). An energy industry professional pointed out in the comments on X that the total consumption of 100,000 chips would be equivalent to the entire output of a small solar or wind power plant. On top of that, one must also count the energy used by the supporting facilities for the chips, including servers and cooling equipment. It is easy to imagine the pressure that so many power-hungry facilities, concentrated in a small area, would put on the power grid.

AI Power Consumption: Just the Tip of the Iceberg

On the issue of AI's energy consumption, a report by The New Yorker once attracted widespread attention. The report estimated that ChatGPT's daily power consumption may exceed 500,000 kilowatt-hours (kWh). (See: ChatGPT's Daily Power Consumption Exceeds 500,000 kWh, and the Bottleneck that Blocks AI's Development Is Actually Energy?)

In fact, although AI's current power consumption looks like an astronomical figure, it is still far behind that of cryptocurrencies and traditional data centers. The problems encountered by Microsoft's engineers also show that what restricts the development of AI is not only the energy consumption of the technology itself, but also that of the supporting infrastructure, as well as the carrying capacity of the power grid.

A report released by the International Energy Agency (IEA) shows that in 2022, global data centers, artificial intelligence, and cryptocurrencies together consumed 460 terawatt-hours (TWh) of electricity, nearly 2% of global electricity consumption. The IEA predicts that in the worst-case scenario, electricity consumption in these fields will reach 1,000 TWh by 2026, equivalent to the entire electricity consumption of Japan.
However, the report also shows that the energy currently going directly into AI research and development is much lower than that of data centers and cryptocurrencies. NVIDIA holds approximately 95% of the AI server market and supplied about 100,000 chips in 2023, with an annual power consumption of approximately 7.3 TWh. By comparison, cryptocurrencies consumed 110 TWh in 2022, equivalent to the entire electricity consumption of the Netherlands.
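The figures quoted above can be put on a common scale with a few lines of arithmetic. The following is a minimal sketch, not an authoritative model: it uses only numbers cited in the article, the constant and function names are invented here for illustration, and it assumes the ChatGPT daily estimate holds for all 365 days of the year.

```python
# Figures quoted in the article; all names below are illustrative only.
H100_PEAK_WATTS = 700            # peak power per H100 chip (NVIDIA figure)
CHIP_COUNT = 100_000             # cluster size mentioned in Corbitt's post
CHATGPT_KWH_PER_DAY = 500_000    # The New Yorker estimate
DC_AI_CRYPTO_TWH_2022 = 460      # IEA: data centers + AI + crypto, 2022
CRYPTO_TWH_2022 = 110            # IEA: cryptocurrencies, 2022
WORST_CASE_TWH_2026 = 1_000      # IEA worst-case projection for 2026

KWH_PER_TWH = 1e9                # 1 TWh = 1,000,000,000 kWh


def cluster_peak_megawatts(chip_count: int, watts_per_chip: float) -> float:
    """Peak draw of the chips alone (no servers, networking, or cooling)."""
    return chip_count * watts_per_chip / 1e6


def average_megawatts(kwh_per_day: float) -> float:
    """Average power implied by a daily energy figure."""
    return kwh_per_day / 24 / 1_000


def annual_twh(kwh_per_day: float) -> float:
    """Yearly energy, assuming the daily figure holds for 365 days."""
    return kwh_per_day * 365 / KWH_PER_TWH


peak_mw = cluster_peak_megawatts(CHIP_COUNT, H100_PEAK_WATTS)
chatgpt_mw = average_megawatts(CHATGPT_KWH_PER_DAY)
chatgpt_twh = annual_twh(CHATGPT_KWH_PER_DAY)

print(f"100,000 H100s at peak:        {peak_mw:.0f} MW")
print(f"ChatGPT average draw:         {chatgpt_mw:.1f} MW")
print(f"ChatGPT per year:             {chatgpt_twh:.2f} TWh")
print(f"ChatGPT share of 460 TWh:     {chatgpt_twh / DC_AI_CRYPTO_TWH_2022:.2%}")
print(f"Crypto share of 460 TWh:      {CRYPTO_TWH_2022 / DC_AI_CRYPTO_TWH_2022:.1%}")
print(f"2022 to 2026 worst-case rise: {WORST_CASE_TWH_2026 / DC_AI_CRYPTO_TWH_2022:.1f}x")
```

Read this way, the 70 MW figure is an instantaneous load on a local grid, while the TWh figures are energy totals over a year, which is why a single concentrated cluster can strain a regional grid even though AI's share of annual consumption is still small.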

Cooling Energy Consumption: Not to Be Ignored

The energy efficiency of a data center is usually evaluated by its power usage effectiveness (PUE), the ratio of all energy consumed by the facility to the energy consumed by the IT load. The closer the PUE is to 1, the less energy the data center wastes. A report released by the Uptime Institute, a data center standards organization, shows that the average PUE of large data centers worldwide in 2020 was approximately 1.59. In other words, for every 1 kWh of electricity consumed by a data center's IT equipment, its supporting equipment consumes another 0.59 kWh.

The vast majority of this additional energy goes to the cooling system. One survey study shows that cooling can account for up to 40% of a data center's total energy consumption. In recent years, as chips have been upgraded and the power of individual devices has increased, the power density of data centers (that is, power consumption per unit area) has kept rising, placing higher demands on heat dissipation. At the same time, improving the design of a data center can significantly reduce wasted energy.

Because of differences in cooling systems, structural design, and other factors, energy efficiency varies greatly between data centers. The Uptime Institute report shows that European countries have reduced their PUE to 1.46, while in the Asia-Pacific region more than one-tenth of data centers still have a PUE above 2.19. Countries around the world are taking measures to encourage data centers to save energy and cut emissions.
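To make the PUE definition concrete: PUE is total facility energy divided by IT energy, so the overhead per kWh of IT load is PUE minus 1, and the overhead's share of the total is (PUE - 1) / PUE. The sketch below applies this to the PUE values quoted in the article. The function names are hypothetical, and treating the 70 MW peak draw of 100,000 H100 chips as the entire IT load is a simplifying assumption made only for illustration.

```python
def overhead_per_it_kwh(pue: float) -> float:
    """kWh used by supporting equipment for each 1 kWh of IT load."""
    return pue - 1.0


def overhead_share_of_total(pue: float) -> float:
    """Fraction of total facility energy that does not reach IT equipment."""
    return (pue - 1.0) / pue


def facility_megawatts(it_load_mw: float, pue: float) -> float:
    """Total facility power needed to serve a given IT load."""
    return it_load_mw * pue


# PUE values quoted in the article.
PUE_EXAMPLES = {
    "Global average, large data centers (2020)": 1.59,
    "Europe": 1.46,
    "Asia-Pacific, least efficient tenth": 2.19,
    "China's ceiling from 2025": 1.30,
}

# Assumption for illustration: the IT load is just the 70 MW chip peak.
IT_LOAD_MW = 70.0

for label, pue in PUE_EXAMPLES.items():
    print(
        f"{label}: PUE {pue:.2f}, "
        f"overhead {overhead_per_it_kwh(pue):.2f} kWh per IT kWh, "
        f"{overhead_share_of_total(pue):.0%} of total, "
        f"facility draw {facility_megawatts(IT_LOAD_MW, pue):.1f} MW"
    )
```

At a PUE of 1.59, overhead is roughly 37% of total facility energy, which is in line with the survey figure that cooling can reach up to 40% of the total.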
The European Union, for example, requires large data centers to install waste heat recovery equipment; the US government is investing in research and development of more energy-efficient semiconductors; and the Chinese government has introduced measures requiring data centers to have a PUE of no more than 1.3 from 2025 and to raise their share of renewable energy year by year, reaching 100% by 2032.

Power Consumption by Technology Companies: Difficulties in Saving and Generating

With the development of cryptocurrencies and AI, the data centers of technology companies keep growing in scale. According to statistics from the International Energy Agency (IEA), in 2022 the United States had 2,700 data centers, which accounted for 4% of national electricity consumption, and this share is predicted to reach 6% by 2026. As land on the east and west coasts of the United States becomes increasingly scarce, data centers are gradually shifting to central states such as Iowa and Ohio. However, industry in these second-tier regions is not well developed, and the local power supply may not be able to meet the demand.

Some technology companies are trying to free themselves from the constraints of the power grid by purchasing electricity directly from small nuclear power plants, but both this way of buying power and the construction of new nuclear power plants face complex administrative procedures. Microsoft is trying to use AI to help complete the applications, while Google uses AI to schedule computing tasks in order to improve the operational efficiency of the power grid and reduce corporate carbon emissions. As for when controlled nuclear fusion will be put into practical use, that is still unknown.

Climate Warming: Making Things Worse

AI research and development requires stable, robust power grid support, but as extreme weather becomes more frequent, the power grids in many areas are becoming more vulnerable.
Climate warming will lead to more frequent extreme weather events, which not only drive up electricity demand sharply and increase the burden on the power grid, but also damage grid facilities directly. The IEA report points out that, because of drought, insufficient rainfall, and early snowmelt, the utilization rate (capacity factor) of global hydropower fell below 40% in 2023, its lowest level in 30 years.

Natural gas is often regarded as a bridge in the transition to renewable energy, but it is not reliable in extreme winter weather. In 2021, a cold wave hit Texas in the United States and caused large-scale power outages, with some residents left without power at home for more than 70 hours. One of the main causes of this disaster was that natural gas pipelines froze, forcing gas-fired power plants to shut down.

The North American Electric Reliability Corporation (NERC) predicts that from 2024 to 2028, more than 3 million people in the United States and Canada will face an increasingly high risk of power outages. To ensure energy security while also saving energy and cutting emissions, many countries regard nuclear power as a transitional measure. At the 28th Conference of the Parties to the United Nations Framework Convention on Climate Change (COP28), held in December 2023, 22 countries signed a joint statement committing to triple nuclear power generation capacity from the 2020 level by 2050. At the same time, with China, India, and other countries vigorously promoting nuclear power construction, the IEA predicts that global nuclear power generation will reach a historical high by 2025.

The IEA report points out: "In the face of the changing climate pattern, it will become increasingly important to improve energy diversification, enhance the cross-regional dispatch capacity of the power grid, and adopt more resilient power generation methods."
Securing power grid infrastructure matters not only for the development of AI technology, but also for the national economy and people's livelihoods.